Every year, about 185,000 people undergo an amputation in the United States. Bionic prosthetic limbs for amputees who have lost their hands or part of their arms have come a long way, but it remains hard to replicate the way a natural hand grasps and holds objects. Current prostheses work by reading myoelectric signals (electrical activity of the muscles, recorded from the surface of the stump), but they don't always work well for grasping motions, which require varied force in addition to opening and closing the fingers.

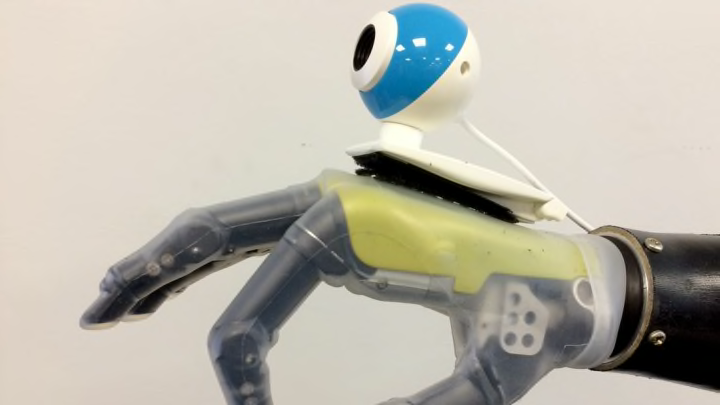

Now, however, researchers at Newcastle University in the UK have developed a trial bionic hand that "sees" with the help of a camera, allowing its wearer to reach for and grasp objects fluidly, without having to put much thought into it. Their results were published in the Journal of Neural Engineering.

The research team, co-led by Ghazal Ghazaei, a Ph.D. student at Newcastle University, and Kianoush Nazarpour, a senior lecturer in biomedical engineering, used a machine learning approach known as “deep learning,” in which a computer system learns to recognize and classify patterns after being given a large amount of training data. In this case, the training data consisted of images. The kind of deep learning system they used, known as a convolutional neural network, or CNN, generally performs better the more data it is given.

“After many iterations, the network learns what features to extract from each image to be able to classify a new object and provide the appropriate grasp for it,” Ghazaei tells Mental Floss.

TRAINING BY LIBRARIES OF OBJECTS

They first trained the CNN on 473 common objects from a database known as the Amsterdam Library of Object Images (ALOI), each of which had been photographed 72 times from different angles and orientations, and in different lighting. They then labeled the images with one of four grasp types: palm wrist neutral (as when picking up a cup); palm wrist pronated (such as picking up the TV remote); tripod (thumb and two fingers); and pinch (thumb and first finger). For example, “a screw would be classified as a pinch grasp type” of object, Ghazaei says.
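As a rough illustration of the labeling step, the sketch below tags objects with one of the four grasp types and works out the size of the training set implied by the numbers above. The `Grasp` enum and `GRASP_LABELS` table are hypothetical names, the three labels are just the examples mentioned in the text, and the idea that every photograph of an object shares that object's label is an assumption rather than something the researchers state.

```python
from enum import Enum


class Grasp(Enum):
    """The four grasp types named in the article."""
    PALM_WRIST_NEUTRAL = "palm wrist neutral"
    PALM_WRIST_PRONATED = "palm wrist pronated"
    TRIPOD = "tripod"
    PINCH = "pinch"


# Illustrative labels only, taken from the examples given in the text.
GRASP_LABELS = {
    "cup": Grasp.PALM_WRIST_NEUTRAL,
    "tv remote": Grasp.PALM_WRIST_PRONATED,
    "screw": Grasp.PINCH,
}

OBJECTS = 473          # objects drawn from ALOI
VIEWS_PER_OBJECT = 72  # photographs per object


def training_set_size(objects: int = OBJECTS, views: int = VIEWS_PER_OBJECT) -> int:
    """Assumes every view of an object carries that object's grasp label."""
    return objects * views


print(training_set_size())  # 473 * 72 = 34056 labeled images
```

In other words, 473 objects photographed 72 times each gives the network roughly 34,000 labeled examples to learn from.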

To observe the CNN's training in real time, they then created a smaller, secondary library of 71 objects from the list, photographed each of them 72 times, and showed the images to the CNN. (The researchers are also adapting this smaller library to create their own grasp library of everyday objects to refine the learning system.) Eventually, the computer learns which grasp it needs to use to pick up each object.

To test the prosthetic with participants, they put two transradial (through the forearm or below the elbow) amputees through six trials while wearing the device. In each trial, the experimenter placed a series of 24 objects at a standard distance on the table in front of the participant. For each object, “the user aims for an object and points the hand toward it, so the camera sees the object. The camera is triggered and a snapshot is taken and given to our algorithm. The algorithm then suggests a grasp type,” Ghazaei explains.

The hand automatically assumes the shape of the chosen grasp type and helps the user pick up the object. The camera is triggered by the user’s aim, which is detected in real time from the user’s electromyogram (EMG) signals. Ghazaei says the computer-driven prosthetic is “more user friendly” than conventional prosthetic hands, because it takes the effort of determining the grasp type out of the equation.
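The sequence described above (aim, snapshot, classification, preshaping) can be sketched as a short pipeline. Everything here is a stand-in: `Hand`, `grasp_cycle`, `capture_snapshot`, and `classify_grasp` are hypothetical names, and the classifier in the toy run is a fixed answer rather than a trained CNN.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Hand:
    """Hypothetical stand-in for the prosthetic hand controller."""
    current_grasp: Optional[str] = None

    def preshape(self, grasp: str) -> None:
        # A real device would drive its motors here; this only records the choice.
        self.current_grasp = grasp


def grasp_cycle(
    aim_detected: bool,
    capture_snapshot: Callable[[], str],
    classify_grasp: Callable[[str], str],
    hand: Hand,
) -> Optional[str]:
    """Run one aim -> snapshot -> classify -> preshape cycle."""
    if not aim_detected:
        return None                   # no aim signal, so the camera is not triggered
    image = capture_snapshot()        # the camera takes a single picture
    grasp = classify_grasp(image)     # the algorithm suggests a grasp type
    hand.preshape(grasp)              # the hand assumes that shape
    return grasp


# Toy run with placeholder callables.
hand = Hand()
result = grasp_cycle(True, lambda: "screw.png", lambda image: "pinch", hand)
print(result, hand.current_grasp)  # pinch pinch
```

The point of the design is that the user only supplies the aim; choosing the grasp is left to the classifier.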

LEARNING THROUGH ERROR CORRECTION

The six trials were broken into different conditions aimed at training the prosthetic. In the first two trials, the subjects got a lot of visual feedback from the system, including being able to see the snapshot the CNN took. In the third and fourth trials, the prosthetic only received raw EMG signals or the control signals. In the fifth and sixth, the subjects had no computer-based visual feedback at all. In the sixth, however, they could reject a wrong grasp chosen by the hand by re-aiming the webcam at the object to take a new picture. “This allowed the CNN structure to classify the new image and identify the correct grasp,” Ghazaei says.
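The correction step in the sixth trial amounts to a retry loop: if the user rejects the suggested grasp, a new snapshot is taken and classified again. Here is a minimal sketch, assuming a hypothetical `classify` function, a `user_accepts` callback, and an arbitrary cap on attempts (the article does not mention one).

```python
from typing import Callable, Iterable, Optional


def grasp_with_correction(
    snapshots: Iterable[str],
    classify: Callable[[str], str],
    user_accepts: Callable[[str], bool],
    max_attempts: int = 3,  # arbitrary limit chosen for this sketch
) -> Optional[str]:
    """Return the first grasp the user accepts, or None if attempts run out."""
    for attempt, image in enumerate(snapshots, start=1):
        if attempt > max_attempts:
            break
        grasp = classify(image)
        if user_accepts(grasp):
            return grasp
        # Rejected: the user re-aims and the loop moves on to the next snapshot.
    return None


# Toy run: the first view is misclassified, the second one is accepted.
fake_predictions = {"view_1": "tripod", "view_2": "pinch"}
chosen = grasp_with_correction(
    ["view_1", "view_2"],
    classify=lambda image: fake_predictions[image],
    user_accepts=lambda grasp: grasp == "pinch",
)
print(chosen)  # pinch
```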

Across all trials, the subjects were able to use the prosthetic to grasp an object 73 percent of the time. However, in the sixth trial, when they had the opportunity to correct an error, the two participants' success rates rose to 79 and 86 percent.

Though the project is currently only in the prototyping phase, the team has been given clearance from the UK's National Health Service to scale up the study with a larger number of participants, which they hope will expand the CNN’s ability to learn and correct itself.

“Due to the relatively low cost associated with the design, it has the potential to be implemented soon,” Ghazaei says.