By Harold Maass

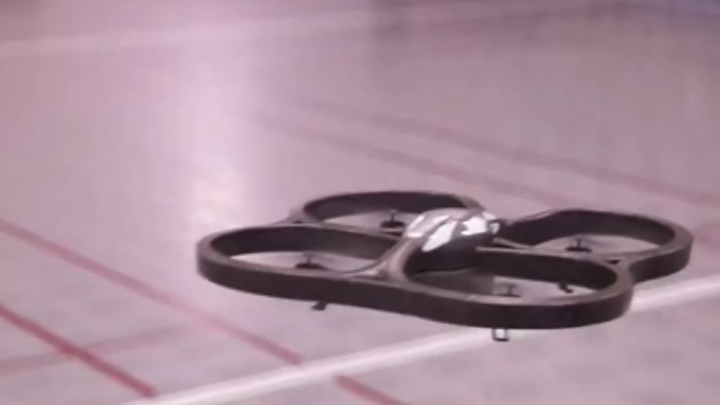

Call it the quadcopter mind meld. A group of biomedical engineers at the University of Minnesota has developed a novel way to fly a robotic helicopter, using their own brains as the remote control. The team's research was published Tuesday in the Journal of Neural Engineering.

They're not the first to try such a trick. A Duke neuroscientist implanted electrodes in a monkey a few years ago so it could control a walking robot. But this research team managed its feat without implants, using an EEG cap fitted with 64 electrodes that detect electrical activity produced by neurons in the brain's motor cortex. That allows the wearer to control the aircraft simply by thinking about a series of hand gestures.

The subjects watched where the quadcopter was going on a computer screen and imagined clenching their fists to steer it: the left fist to go left, the right fist to go right, and both to rise. The commands were sent to the craft via WiFi, and the five subjects managed to pilot the helicopter to its target 66 percent of the time.

Predictably, tech-savvy commentators found the idea of controlling a quadcopter by thought alone to be pretty cool. George Dvorsky at io9 said it was a truly remarkable accomplishment:

First, there's the order of complexity to consider. This quadcopter has to be navigated across three different dimensions... Incredibly, the copter can be seen zipping around the room as it flies through various sets of rings. It's wild to think that it’s being navigated by an external, human mind.

Second, the achievement offers yet another example of the potential for remote presence. Thought-controlled interfaces will not only allow people to move objects on a computer screen, or devices attached to themselves, but also external devices with capacities that significantly exceed our own. In this case, a flying toy. In future, we can expect to see remote presence technologies applied to even more powerful robotic devices, further blurring the boundary that separates our body from the environment. [io9]

Previous advances in brain-computer interfaces involved transmitting a single command to a machine, such as a robotic arm, which then carried out a pre-programmed task to completion. Rachel Nuwer at Popular Mechanics said the ability to steer continuously is why the researchers think their work could open new possibilities for people with physical limitations such as paralysis.

"This new system allows users to make asynchronous (real-time) decisions and change course in midstream rather than having to wait until the prior task is completed," Nuwer says. "In the real world, this would allow a person to start walking forward to the bathroom, for example, but then change his mind and head left into the kitchen."

The aim, said Leo Mirani at Quartz, is to help "the paralyzed to restore their 'autonomy of world exploration.' For healthy users, the possibilities are boundless."
