What’s in a head? While many appreciate the aesthetics of these things that sit atop the body, a head is capable of much more than just looking good. In fact, a few heads—whether human or animal, ancient artifact or geological feature—have actually changed the course of history … some for good, some for ill, and some in very surprising ways that affect us every day. Here are just a few of them.

1. L'Inconnue de la Seine

The most popular tale behind L’Inconnue de la Seine, or The Unknown Woman of the Seine, is that in the late 19th century, the body of a 16-year-old girl was pulled from Paris’s Seine River. No one knew what had happened to her—authorities assumed she had died by suicide—and no one came forward to claim her body when it was displayed (per the practice of the day) at the Paris Mortuary. But there was something about her peaceful, half-smiling face that the mortuary attendant couldn’t get out of his mind, so he had a plaster death mask made. It would soon take on a life of its own: Molds of her face were offered for sale, first locally, and then en masse. It wasn’t long before L'Inconnue de la Seine (a name she was given in 1926), whom Albert Camus dubbed “the drowned Mona Lisa,” was entrancing influential writers and artists, among them Vladimir Nabokov, who penned a poem about her, and Man Ray, who photographed her "death mask" (though it’s unlikely to be an actual death mask: when the BBC asked experts, they felt the face was too healthy to have come from a dead body, and was more likely taken from a living model).

More than half a century after L’Inconnue’s supposed drowning, a doctor named Peter Safar was developing CPR, and he wanted a doll for people to practice on. He approached Norwegian toymaker Åsmund S. Lærdal to make the manikin. Lærdal was game—just a few years earlier, he had pulled his nearly lifeless 2-year-old son out of the water and resuscitated him; he understood how valuable a training tool of this kind would be. According to the Lærdal website, “He believed that if such a manikin was life-sized and extremely realistic in appearance, students would be better motivated to learn this lifesaving procedure.” And so he chose L’Inconnue de la Seine to be the face of the manikin, which he named Resusci Anne. The company says that 500 million people worldwide have trained on the manikin, saving an estimated 2.5 million lives.

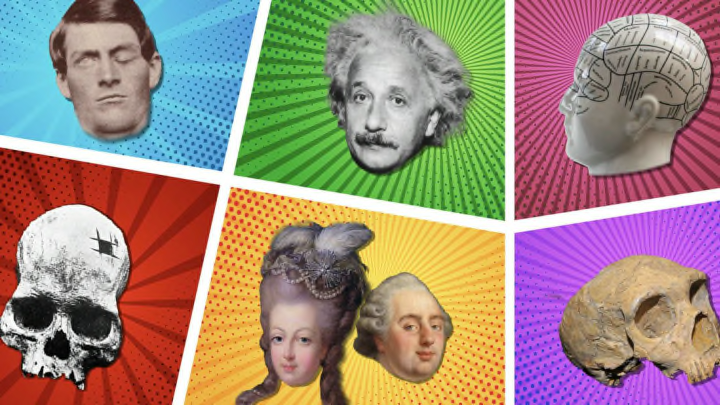

2. Louis XVI and Marie Antoinette

The imprisonment and eventual beheadings of the king and queen of France during the French Revolution were part of years of social and political upheaval in the country. But their heads changed history in stranger ways, too.

It's said that after their trips to the guillotine nine months apart in 1793, Louis XVI and Marie Antoinette’s death masks were sculpted in wax by an artist named Marie Grosholtz. (Whether that’s true or later mythmaking, Grosholtz definitely modeled many victims of the guillotine.) She would go on to marry an engineer named François Tussaud, becoming known as Madame Tussaud. Eventually, she moved to London, where she would set up a waxworks on Baker Street that would become known for its "Chamber of Horrors," featuring what was said to be the very blade that executed 22,000 people—including Marie Antoinette and Louis XVI. Then, around 1865, the heads of the king and queen themselves appeared in the display. While they were said to be modeled from the decapitated heads, Tussaud biographers view that as unlikely; as Kate Berridge notes, the masks appeared 15 years after Tussaud's death, and, compared to sketches of Marie Antoinette on the way to the guillotine, “the wax queen looks remarkably well—more like someone catching up with her beauty sleep than someone recently decapitated.” Instead, it’s possible they were life masks later turned into death masks.

Whether the heads are genuine or later marketing, the story would become an integral part of the Madame Tussauds legend—a legend that has since expanded to more than 20 Madame Tussauds locations around the world that rake in millions of dollars each year.

3. Johann Friedrich Blumenbach’s Caucasian Skull

Johann Friedrich Blumenbach, a German doctor and anthropologist working in the 18th and 19th centuries, devoted many years to measuring skulls and, on the basis of his analysis, concluded that there were five families in mankind: Caucasian, Mongolian, Malayan, Ethiopian, and American. One, a female skull that originated in the Caucasus Mountains, was, in his opinion, the “most handsome and becoming” of his collection. As Isabel Wilkerson writes in Caste: The Origins of Our Discontents, because he thought that skull to be the most beautiful, “he gave the group to which he belonged, the Europeans, the same name as the region that had produced it. That is how people now identified as white got the scientific-sounding yet random name Caucasian.”

In categorizing humanity in this way, Blumenbach helped to create the concept of race, a social construct that has no biological basis (all humans are, in fact, 99.9 percent genetically identical to each other). In 1850, a decade after Blumenbach’s death, Dr. Robert Latham declared in The Natural History of the Varieties of Man that “Never has a single head done more harm to science than was done in the way of posthumous mischief, by the head of this well-shaped female from Georgia.”

4. Oxford Museum’s Dodo Head

Much mystery surrounds the dodo: The birds have been extinct since some time in the 1600s, and remains beyond subfossils are scarce. The dodo head at the Oxford University Museum of Natural History is the only specimen with soft tissue, and scientists have been studying it in earnest since the 19th century.

Around that time, casts of the head were sent to institutions around the world, and it was featured in an 1848 book that raised the animal’s profile, which may have encouraged scientists to head to Mauritius to pick up the subfossils seen in museums today. More recently, the specimen has helped increase what we understand about the bird, from “what the dodo would have looked like, what it may have eaten, where it fits in with the bird evolutionary tree, island biogeography and of course, extinction,” Mark Carnall, one of the collections managers at the museum, told Mental Floss in 2018. (DNA taken from leg specimens, meanwhile, revealed that the dodo’s closest living relative is the Nicobar pigeon.) Chances are, we’ll be learning from the dodo head for years to come.

5. Phineas Gage

On September 13, 1848, a Vermont railroad construction foreman named Phineas Gage was clearing rocks with explosives when he accidentally ignited some gunpowder with a tamping iron. The explosion sent the 3.5-foot-long, 1.25-inch-diameter, 13-pound rod through Gage’s left cheek, behind his left eye, and out through the top of the skull, landing about 30 yards away. It was, according to a local paper, “actually greased with the matter of the brain.”

Gage survived, and was actually conscious and able to speak post-accident (he even walked up the stairs of his boarding house on his own, according to news reports). His survival was miraculous—and would be even by today’s standards. As such, he was of great interest to doctors of his day; Dr. John Martyn Harlow began attending to Gage shortly after the accident, and continued to observe him for many years.

The incident had left Gage blind in his left eye, though he eventually recovered enough to return to work after a brush with infection. However, his co-workers reported that upon his return, he seemed like a different person.

As Dr. Andrew Larner and Dr. John Paul Leach write in Advances in Clinical Neuroscience and Rehabilitation, according to Harlow, Gage—who had previously been responsible and efficient—“became irreverent, capricious, profane and irresponsible, and showed defects in rational decision-making and the processing of emotion, such that his employers refused to return him to his former position.” According to Malcolm Macmillan, author of An Odd Kind of Fame: Stories of Phineas Gage, this change in behavior lasted only a couple of years: Gage would eventually relocate to South America, where he became a stagecoach driver, a job that required focus and planning.

Gage, who died in 1860, kept the tamping iron with him for much of his life. It now sits with his skull at Harvard Medical School’s Warren Anatomical Museum.

As time passed, Gage’s case became, in the words of Macmillan, “the standard against which other injuries to the brain were judged.” Modern scientists still study his skull for insight into how his brain might have been damaged.

According to Warren Anatomical Museum curator Dominic Hall, how we’ve viewed Gage has changed over time. “In 1848, he was seen as a triumph of human survival,” Hall told The Harvard Gazette. “Then, he becomes the textbook case for post-traumatic personality change. Recently, people interpret him as having found a form of independence and social recovery, which he didn’t get credit for 15 years ago … By continually reflecting on his case, it allows us to change how we reflect on the human brain and how we interact with our historical understanding of neuroscience.”

6. The African Masks that Influenced Modern Art

People who have admired Pablo Picasso’s Les Demoiselles d’Avignon might wonder how the artist came up with the striking geometric faces of his models. They might conclude that they were wholly the invention of the artist—but in reality, Picasso was heavily influenced by masks created by the Fang people of Gabon, among artists from other African nations and Oceania. “After Matisse showed Picasso African art for the first time,” artist Yinka Shonibare told The Guardian, “it changed the history of modern art.”

Art history legend has it that modernist artists called the Fauves “discovered” statuettes from Central and West Africa, as well as the Fang masks, in the early 1900s. According to Joshua I. Cohen in The Black Art Renaissance, these European artists considered most works coming out of Africa to be primitive—either because they were two-dimensional, and “advanced” art was three-dimensional; or because the three-dimensional art that was being created wasn’t naturalistic. But they found the Fang masks compelling nonetheless, and artists like Matisse, André Derain, Maurice de Vlaminck, and (though he wasn't usually listed as a Fauve) Picasso appropriated the style in their own works.

It should go without saying, but just because the art didn’t meet European expectations doesn’t mean it was primitive—in fact, it was far from it. “Herein lies the paradox of ‘primitivism,’” Cohen writes in The Black Art Renaissance. “It accurately reflects a Eurocentric pattern of classifying innumerable nonclassical traditions as ‘primitive,’ yet its totalizing view perpetuates the same pattern by obfuscating ways in which artists drew formal lessons from specific sculptural objects.” According to Cohen, the Fang masks may have been created in reaction to the violent colonialism rocking Gabon, and some of them were actually created explicitly for export.

Artifacts like these masks not only directly inspired Picasso’s African Period, which lasted from 1907 to 1909, but also, he would later say, helped him understand “that this was the true meaning of painting. Painting is not an esthetic process; it’s a form of magic which is interposed between the hostile universe and ourselves, a means of seizing power, of imposing form on our fears as on our desires. The day I understood that, I knew that I had found my way.”

Once Picasso began using African influences in his art, others followed. “You’ve had the whole kind of modernist temple, so-called high art, built on the back of the art of Africa,” Shonibare says. “But that’s never really confronted and acknowledged.”

7. Albert Einstein

During his lifetime, Albert Einstein’s incredible brain gave us the theories of special and general relativity, E = mc², a design for a green refrigerator, and more. The physicist and Nobel Prize winner knew that people would want to study his brain after he died, but Einstein wasn’t interested in hero worship. He asked for his remains to be cremated.

Thomas Harvey—the pathologist who was on call when Einstein passed away from an aortic aneurysm in New Jersey on April 18, 1955—ignored those instructions. He removed the scientist’s brain, getting permission from one of Einstein’s kids after the fact, “with the now-familiar stipulation that any investigation would be conducted solely in the interest of science, and that any results would be published in reputable scientific journals,” Brian Burrell writes in his book Postcards from the Brain Museum.

Harvey eventually had the organ cut into 240 slices and preserved them; they came with him when he moved to Kansas, where he kept them in a box under a beer cooler. After taking a job in a factory in Lawrence, he became friends with Beat poet William Burroughs. “The two men routinely met for drinks on Burroughs's front porch. Harvey would tell stories about the brain, about cutting off chunks to send to researchers around the world. Burroughs, in turn, would boast to visitors that he could have a piece of Einstein any time he wanted,” Burrell writes. Harvey returned to Princeton in the 1990s, and the brain later took a trip from New Jersey to California in the trunk of his car (Harvey was going to meet Einstein’s granddaughter).

All the while, Harvey tried to get scientists to study the brain, and a few papers were eventually published in the ‘80s and ‘90s, all of which found supposed differences in Einstein’s brain that would account for his extreme intelligence. But many have cast doubt on this research. As Virginia Hughes pointed out in National Geographic in 2014, “The underlying problem in all of the studies is that they set out to compare a category made up of one person, an N of 1, with a nebulous category of ‘not this person’ and an N of more than 1.” Further, she notes that it’s impossible to attribute Einstein’s intelligence or special skills to the features of his brain.

In fact, according to William Kremer at the BBC, many of the researchers Harvey lent Einstein’s brain to “found it to be no different from normal, non-genius brains.” In analyzing the studies, Terence Hines of Pace University wrote that, yes, Einstein’s brain was different from control brains, “But who would have expected anything else? Human brains differ. The differences that were found were hardly ones that would suggest superior intelligence, although the authors desperately tried to spin their results to make it appear so.” Isn’t it inspiring to think that all it takes to make world-changing discoveries is a brain that’s totally normal?

8. The Woman Who Doesn’t Feel Fear

Doctors call her SM: a woman with a rare genetic condition called Urbach-Wiethe disease, which has calcified areas of her brain called the amygdalae and left her virtually unable to feel fear. Once, when held at knifepoint in a park, SM dared her assailant to cut her; unnerved, the man let her go, at which point she walked away. She didn’t call the police, and returned to the park the next day. She has had similar reactions to other incidents that would have provoked fear in others; she often experiences curiosity about things that others might be afraid of, like snakes and spiders, and she stands extremely close to total strangers.

Through SM—whom scientists have been studying for nearly three decades, and whose identity has not been revealed for her protection—we have learned much about how the amygdalae are involved in processing fear. As Ed Yong wrote in National Geographic in 2010, clinical psychologist Justin Feinstein believes that the amygdalae act as a go-between for “parts of the brain that sense things in the environment, and those in the brainstem that initiate fearful actions. Damage to the amygdala breaks the chain between seeing something scary and acting on it.” SM’s lack of fear leads her into situations she should avoid, which, Feinstein writes, “highlight[s] the indispensable role that the amygdala plays in promoting survival by compelling the organism away from danger.”

Researchers continue to learn from SM: In 2013, for example, scientists discovered that, under certain circumstances, she actually can feel fear. In an experiment, researchers had her inhale a large (but not lethal) concentration of CO2 through a mask. They expected her to shrug it off, but according to neuroscientist John Wemmie, “we were pretty shocked when exactly the opposite happened.” During the experiment, she cried out for help, and when asked later what emotion she had experienced, she told them, "Panic mostly, 'cause I didn't know what the hell was going on.” In a paper about the experiment, the researchers said, “Contrary to our hypothesis, and adding an important clarification to the widely held belief that the amygdala is essential for fear, these results indicate that the amygdala is not required for fear and panic evoked by CO2 inhalation.” Since then, more studies have been done, giving us a greater insight into how the brain processes fear.

9. The Face on Mars

When Viking 1 landed on Mars on July 20, 1976, it made history as the first American lander to successfully touch down on the red planet and return images. Meanwhile, the companion orbiter was taking pictures of possible Viking 2 landing sites—and launched a thousand conspiracy theories in the process. Some photos appeared to show a giant face in the planet’s Cydonia region. It was dubbed “The Face on Mars.”

At the time, NASA released the photo and noted that the “face” was probably a trick of shadows and computer errors. (Face pareidolia, the human tendency to see faces in everything from food to clouds to cars, may have also been a factor.) “The authors reasoned it would be a good way to engage the public and attract attention to Mars,” a NASA piece called “Unmasking the Face on Mars” explained. A couple of years later, two computer programmers working for NASA on a project stumbled across the photo and determined (despite their total lack of space experience) that the “face” could not have occurred naturally. It was this interpretation that captured public imagination, leading to tons of speculation that NASA was covering up alien life on the planet, and even the idea that the face was evidence of an ancient civilization that had since died out (which, again, NASA was supposedly covering up).

Even after later photographs proved NASA’s hunch to be true, and that the “face” is just a mesa common on the Martian surface, its legend lives on in pop culture: It’s been featured in TV shows like The X-Files, Futurama, and Phineas and Ferb; the movie Mission to Mars; books, comic books, and video games; and even in music.

10. The Fowler Phrenology Bust

In the late 1700s, German physiologist Franz Joseph Gall began asking his friends and family if he could examine their heads. He had a theory: that the skull was shaped by the development of areas of the brain, and that by feeling the skull, you could tell which areas of the brain were most developed—and, from that, determine everything you needed to know about a person’s character, proclivities, and mental abilities. “The more developed the trait,” Minna Scherlinder Morse wrote at Smithsonian in 1997, “the larger the organ, and the larger a protrusion it formed in the skull.” Gall called it “cranioscopy” and “organology,” but the name that stuck came from Gall’s assistant, Johann Gaspar Spurzheim. He dubbed the practice phrenology (though he didn't coin that term).

Unfortunately, there wasn’t much science behind Gall’s theory. According to Britannica, he would pick—with no evidence—the location of a particular characteristic in the brain and skull, then look at the heads of friends he believed had that characteristic, for a distinctive feature to identify it, which he then measured. (As neuroscientists put it in a 2018 issue of Cortex, “The methodology behind phrenology was dubious even by the standards of the early 19th century.”) His methods became even more problematic when he began to study inmates at prisons and asylums; there, he looked for traits that were specifically criminal, identifying areas of the brain that he said were associated with things like murder and theft (which were later renamed by Spurzheim “to align with more moral and religious considerations,” according to Britannica).

Gall and Spurzheim began to lecture about their ideas across Europe. Gall died in 1828; in 1832, Spurzheim came to the U.S. for a lecture tour, and phrenology took off. It was helped along by brothers Orson and Lorenzo Fowler, who began speaking about phrenology all over the country in the years after Spurzheim died three months into his U.S. tour. The Fowlers created a popular phrenology journal and, of course, their famous phrenology bust, showing where certain characteristics were said to be located in the brain.

Phrenology had a huge effect on American life and culture: According to Morse, “Employers advertised for workers with particular phrenological profiles ... Women began changing their hairstyles to show off their more flattering phrenological features.” It popped up in the works of Herman Melville and Edgar Allan Poe, and everyone from P.T. Barnum to Sarah Bernhardt sat to have their skulls examined. And as a “science,” it was used to promote ideas that we would today consider racist, sexist, and ableist. “The phrenological approach therefore relied on tenuous and perhaps even offensive stereotypes about different social groups,” the neuroscientists wrote in Cortex. Phrenological analysis also justified things like the removal of Native Americans from their ancestral lands.

Phrenology had largely fallen out of favor by the early 1900s, but it wasn’t total bunk: Gall was correct that certain functions are localized to particular areas of the brain, which Paul Broca demonstrated in the 1860s, and as early as 1929, some were linking phrenology to the development of psychology. As Harriet Dempsey-Jones writes in The Conversation, phrenology “was among one of the earlier disciplines to recognize that different parts of the brain have different functions. Sadly, the phrenologists didn’t quite nail what the actual functions were: focusing largely on the brain as the seat of the mind (governing attitudes, predispositions, etc.) rather than the more fundamental functions we know it to control today: motor, language, cognition, perception, and so forth.”

Today, if and when you think about phrenology, the Fowler bust likely comes to mind, thanks to the fact that it’s sold as a quirky piece of home decor everywhere from Amazon to Etsy to Wayfair. Many who buy it are probably unaware of the many ways in which the concept behind it affected the world.

11. The Ice Age Portrait of a Woman

The oldest known portrait of a woman is carved out of ivory and depicts a head and face with “absolutely individual characteristics,” according to Jill Cook, a curator at the British Museum. “She has one beautifully engraved eye; on the other, the lid comes over and there's just a slit. ... And she has a little dimple in her chin: this is an image of a real, living woman.” The 26,000-year-old work of art, found in the 1920s at a site near Dolní Věstonice in what is now Czechia, measures just under 2 inches and likely took hundreds of hours to produce, suggesting that “this was a society that valued their producers.”

While modern humans tend to think of our distant ancestors as primitive, art like this portrait shows that they had the sophistication necessary to create art that was both realistic and abstract, along with a culture and capability to appreciate art—and perhaps even something akin to an art world. “Most people looking at art are looking at the five minutes to midnight—the art of the last 500 years,” Cook told The Guardian. “We have been used to separating work like this off by that horrible word ‘prehistory.’ It's a word that tends to bring the shutters down, but this is the deep history of us.”

12. Engis 2, Gibraltar 1, and Neanderthal 1

In 1829, a child’s skull was found in Awirs Cave in Engis, Belgium. Nearly 20 years later, British Navy officer Edmund Flint came across an adult skull in Forbes' Quarry, Gibraltar. Apparently, scientists didn’t think much about either specimen. But that all changed a few years after a similar adult skull was discovered by quarry workers in Feldhofer Cave—located in Germany’s Neander Valley (or, in the German of the time, Neanderthal)—in 1856. Scientists realized they were dealing with the fossilized remains of human ancestors, and in 1864, named that German specimen Homo neanderthalensis; later, they realized the first two skulls were Neanderthals, too, making those two skulls among the first fossilized human remains ever found. All three skulls—dubbed Engis 2, Gibraltar 1, and Neanderthal 1, respectively—were discovered before Charles Darwin published On the Origin of Species (though Engis 2 and Gibraltar 1 weren’t identified as Neanderthal until later), and not only spurred debate about what it was to be human (scientists of the era considered the skulls to be too ape-like for the Neanderthals to be intelligent; today, we know differently), but as one contemporary newspaper noted, it made us rethink the timeline of human development, which is still an active area of study today.

13. The Man With the "Missing" Brain

We’re taught in school that a brain is pretty essential to being a living, breathing, functioning human being. So French doctors must have experienced quite a shock when they examined a French civil servant—who had come to them complaining of weakness in the leg—and saw, in the words of Nature, “a huge pocket of fluid where most of his brain ought to be” when they performed CT and MRI scans.

The man was a 44-year-old married father of two who was living an entirely normal life. But as an infant, he had been treated for hydrocephalus, cerebrospinal fluid buildup in the brain that, according to the Mayo Clinic, “increases the size of the ventricles and puts pressure on the brain.” The man was treated for hydrocephalus again at 14, but it wasn’t until he returned 30 years later that doctors realized how severe the issue had gotten: One of the researchers told New Scientist that he appeared to have a 50 to 75 percent reduction in the volume of his brain.

Despite appearances, however, the man's brain wasn’t actually gone—as Yale School of Medicine’s Dr. Steven Novella wrote on his blog in 2016, what actually happened was that, as the pressure slowly built up over years and years, his brain was physically compressed: “His brain is mostly all still there, just pressed into a thin cortical rim,” Novella writes. “He did not lose 90 percent of his brain mass ... There has probably been some atrophy over the years due to the chronic pressure, but not much.”

The civil servant's case, and others like it, show that the brain is far more adaptable than we realized—capable of “deal[ing] with something which you think should not be compatible with life,” as pediatric brain defect specialist Dr. Max Muenke put it to Reuters.

14. The Olmec Heads

In 1868, a strange report appeared in the pages of the Veracruz newspaper El Semanario Ilustrado. Penned by José María Melgar y Serrano, it described the existence of a massive stone head with a human face that had been found in Tres Zapotes in the mid-1800s. When ethnologist and archaeologist Matthew Stirling later excavated and wrote about the head in National Geographic in the 1940s, it became clear that it was going to rewrite history. Stirling himself called the discovery “among the most significant in the history of American archaeology.”

The head (and the others like it, discovered later) was created by a previously unknown civilization, which historians dubbed Olmec. They lived in what is now Southern Mexico, and as Lizzie Wade wrote in Archaeology, “emerged by 1200 [BCE] as one of the first societies in Mesoamerica organized into a complex social and political hierarchy.”

In addition to the heads—which may be intended to depict specific individuals, probably the culture’s rulers—archaeologists unearthed other massive works like statues, thrones, and pyramids, as well as ceramics and intricate jade and serpentine carvings. According to TIME, “Art historians and archaeologists agree … that the Olmec produced the earliest sophisticated art in Mesoamerica.”

Much about the heads is a mystery. So far, around 17 Olmec heads have been discovered at sites across Mexico. Carved from volcanic basalt, they’re between 5 and 12 feet tall and weigh up to 60 tons. No one knows how the massive stones were moved from quarries to where they were found—according to anthropologist David C. Grove, when documentary filmmakers attempted to re-create the process with their own Olmec head, “the television company was forced to hire a large truck and crane to move the boulder to a riverside destination. Unfortunately, that new location had its own set of problems, including the fact that the river’s current was deemed too swift for managing the large wooden raft they intended to use to transport their boulder. Thus another compromise had to be made, and the scene of the launch was shifted to the smoother waters of a nearby lagoon.” That didn't work either: The head was so heavy that it caused the raft to sink into the bank of the lagoon. When the production tied tow lines to the raft and attached them to motorboats—technology that obviously wasn't available to the Olmec—to try to yank the raft out of the mud, that also failed, further adding to the mystery.

15. The 3.8-million-year-old MRD Cranium

Everyone knows—and loves—Lucy, the Australopithecus afarensis hominid whose discovery in 1974 “changed our understanding of human evolution,” according to the BBC. But in 2016, a nearly complete cranium called the MRD cranium came along and recast what we thought we already knew.

Australopithecus anamensis, first discovered in 1965, was once thought to have lived before A. afarensis and to have been the species from which A. afarensis evolved. But now, some researchers believe that comparisons between the MRD cranium and a fragment of forehead that may have belonged to an A. afarensis show that the two species of hominids lived side by side for as many as 100,000 years. For other scientists, however, the jury is still out—but regardless of when or with whom A. anamensis lived, the finding shows that the history of human evolution is much more diverse, and complex, than we once thought.

16. The Man Without a Memory

In 1953, a man named Henry Molaison was suffering from severe epileptic seizures. In an attempt to control them, he had experimental surgery to remove part of his brain. The procedure worked, but it had an unintended side effect: Molaison’s ability to form new memories was essentially non-existent. He experienced no loss of intelligence, and could still perceive the world normally, but almost as soon as something happened, he forgot it. His life, he told the researchers studying him, was “like waking from a dream ... every day is alone in itself.” He was able to pick up new motor skills, however, which indicated that there are multiple types of memory, and that he had lost only one.

Molaison spent much of his life in a care facility, living what one researcher called “a life of quiet confusion, never knowing exactly how old he is (he guesses maybe 30 and is always surprised by his reflection in the mirror) and reliving his grief over the death of his mother every time he hears about it. Though he does not recall his operation, he knows that there is something wrong with his memory and has adopted a philosophical stance on his problems: ‘It does get me upset, but I always say to myself, what is to be is to be. That's the way I always figure it now.’”

Molaison—who would be dubbed “Patient H.M.”—wasn’t the first person to experience memory loss after surgery to have part of his brain removed, but his case was the most severe, and studying him made scientists rethink what they thought they knew about memory and how it works. As Larry Squire writes in a 2009 issue of Neuron, “the early descriptions of H.M. inaugurated the modern era of memory research.” Researchers studied him until his death in 2008, establishing, according to Squire, “fundamental principles about how memory functions are organized in the brain.”

17. A Trephined Skull From Peru

Many scientists in the 19th century had dim and racist views of ancient, non-white cultures, deeming them exceedingly primitive in pretty much all ways of life. So you can imagine how surprised the audience at the New York Academy of Medicine was when explorer and archaeologist Ephraim George Squier presented a skull from an Inca burial ground that showed evidence not just of trephination (a surgical technique that involves making a hole in the skull) but also that the person whose skull was opened had actually survived the surgery. The audience, along with the Anthropological Society of Paris, flat-out refused to believe it, despite the fact that preeminent skull expert Paul Broca co-signed Squier’s conclusion. As Charles G. Gross writes in A Hole in the Head: More Tales in the History of Neuroscience, “Aside from the racism characteristic of the time, the skepticism was fueled by the fact that in the very best hospitals of the day, the survival rate from trephining (and many other operations) rarely reached 10 percent, and thus the operation was viewed as one of the most perilous surgical procedures. … [the audiences were] dubious that Indians could have carried out this difficult surgery successfully.”

Any question that it was possible was put to rest a few years later, when skulls dating back to the Neolithic period were found in central France. Since then, trephined skulls dating back thousands of years have been found all over the world (including in the Americas, where the procedure was practiced until the Spanish arrived). Scientists are still debating why our ancient ancestors performed trephining (was it to alleviate pressure in the brain because of an injury, to banish evil spirits, or as part of a ritual?), but the fact remains that the discovery of trephined skulls revealed earlier peoples to be more advanced than the primitive picture of them at the time allowed.

18. The Oldest Homo Sapiens Skull (Yet) Discovered

In 2017, Nature published a study in which researchers said they had found skull fragments at Jebel Irhoud in Morocco that rewrite the history of Homo sapiens. According to the researchers, finds like this skull, which was discovered by miners in the 1960s, not only move the date of the origin of our species back 100,000 years, to 300,000 years ago, but also show that humans likely evolved in more areas of the African continent than previously thought.

“Until now, the common wisdom was that our species emerged probably rather quickly somewhere in a ‘Garden of Eden’ that was located most likely in sub-Saharan Africa,” one of the study’s authors, Jean-Jacques Hublin, told Nature. “I would say the Garden of Eden in Africa is probably Africa—and it’s a big, big garden.”

Because there are differences between this skull and the skull of modern H. sapiens (the Jebel Irhoud skull is more elongated, for example), not all scientists agree that this skull is, in fact, H. sapiens—but if it is, it suggests a more diverse origin for our species than we were previously aware of.

19. Flinders Petrie

You might have heard of Jeremy Bentham, the philosopher who decreed that his body be turned into an auto-icon upon his death, and whose head preservation was botched so terribly that his body's handlers opted to replace it with a wax replica. But Bentham’s severed head, which has been displayed with his auto-icon off and on throughout the years, isn’t the only one associated with University College London. The other belongs to archaeologist William Matthew Flinders Petrie, for whom UCL’s Petrie Museum of Egyptian Archaeology is named.

Upon his death in Jerusalem in 1942, Petrie (who was buried in the city's Protestant cemetery) was decapitated; later, after the war, his head was sent to the Royal College of Surgeons in London, where he had hoped his skull would serve “as a specimen of a typical British skull,” as a friend wrote in a 1944 letter, and be the subject of scientific study.

Bequeathing one’s head to become part of a museum’s collection might seem bizarre, but it begins to make more sense the more you know about Petrie. In addition to being a prominent archaeologist, he was also a proponent of eugenics, a movement of the 19th and 20th centuries that proposed the improvement of the human race through the selective breeding of supposedly "ideal" characteristics (called "positive" eugenics) and the removal of what were deemed "undesirable" characteristics ("negative" eugenics). Petrie collected the heads (and other remains) from dead bodies in the Middle East to lend historical and statistical “evidence” to his ideas and those of the prominent eugenicists Francis Galton (who invented the movement) and Karl Pearson. “Petrie is easily recognizable as a vital figure in the history of archaeology,” Kathleen L. Sheppard wrote in a 2010 issue of Bulletin of the History of Archaeology, “but his work in eugenics has … been mostly overlooked.”

Petrie first collaborated with Galton in the 1880s, snapping photos of different races portrayed in ancient art; the resulting book, Racial Photographs From the Egyptian Monuments, was published in 1887. Thus began a close working relationship that later included Pearson; Petrie collected, measured, and sent thousands of skulls and other remains from the Middle East back to UCL’s labs in London so that the Galton Laboratory would have the necessary data to build “a useful working database for biometric racial comparison,” in Sheppard’s words. “Petrie was able to lend the authority of historical evidence to the eugenics movement. His historical and anthropological arguments allowed Galton to make his claims more authoritative by combining quantitative data with historical trends in civilization and heredity.” In addition to his work with Galton and Pearson, Petrie also wrote two books pushing eugenics: Janus in Modern Life and The Revolutions of Civilisation, in which he argued for things like sterilization or abstinence and state approval of marriages.

These ideas were hugely influential, both in Britain and in the U.S., well into the 20th century, and the consequences were terrible. “[Eugenics] took off probably more in the States than it did here in the UK,” Subhadra Das, a curator at UCL and a historian of eugenics, told Science Focus. “You had people—including people with learning disabilities, but also lots of non-white people in the United States—being subjected to sterilization without their consent because the goal there was to make sure they didn’t pass their genes on to the next generation.” Later, the Nazis put eugenic practices in place to horrific effect in the 1930s and World War II.

Petrie, a man who collected human skulls for scientific analysis, hoped that his own skull would take its place in osteological collections for study, too. (According to Sara Perry and Debbie Challis in a paper published in Interdisciplinary Science Reviews, he did not think his head was an “ideal specimen,” as others would later claim, and stories about its travels in a hat box appear to be apocryphal.) But when his head finally made its way to London, it was without much documentation, and to a scientific collection devastated by the Blitz. The head was never defleshed and remains stored in a glass jar in the collection of the Royal College of Surgeons. It doesn’t seem that any study of it has ever taken place.

20. The Heads and Brains Donated to Science

It’s hard to quantify just how much we’ve learned from the heads and brains donated to science, but it’s safe to say it’s a lot. Not only do students dissect them (and cadavers as a whole) to learn anatomy, but plastic surgeons practice on them to learn how to properly perform techniques, and scientists study donated brains to discover all they can about diseases like Alzheimer’s and Parkinson’s (as the National Institute on Aging notes on its website, “One donated brain can make a huge impact, potentially providing information for hundreds of studies”). Here’s to those unnamed heads that have gone under the knife in the name of science.